Building a strong online community can do wonders for the success of your business and brand. It offers a space to develop meaningful relationships with members, builds brand loyalty, can spark new business opportunities, and allows like-minded members to connect in a safe and trusted space.

Maintaining this sense of trust and safety is essential to the ongoing success of any online community, and keeping your space free from offensive and inappropriate images and videos is key to delivering that sense of security.

While many online communities go to great lengths to communicate terms of engagement, encourage members to flag questionable content, and employ a team of moderators to review and manage posts, the sheer volume of content generated in these spaces means members can still be exposed to offensive material. This is especially true if the community relies solely on human moderators to review every image, video, and comment that is uploaded. And while employing more human moderators can help, it is costly and remains prone to human error.

The best way to ensure ongoing trust and safety within your online community is a content moderation model that combines automation, human moderation, and community feedback.

Automation: Harnessing the power of Artificial Intelligence (AI)

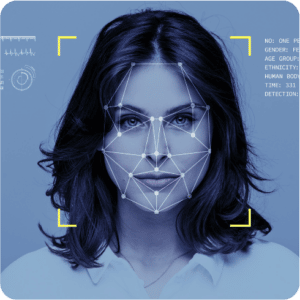

Image Analyzer’s AI-powered image recognition technology detects visual threats in images, videos, and streaming media. By recognizing harmful material such as pornography, extremism, and graphic violence, our content moderation software helps your organization enforce its terms of service and reduces the risk of your community members being exposed to harmful material.

Our technology will quickly and accurately analyze every image or video uploaded to your site and will assign a risk score to each piece of content based on its likelihood of containing specific types of objectionable material.

If harmful content is detected, our tool will immediately remove it from your community platform. The advanced AI in our content moderation software delivers unparalleled accuracy, with near-zero false positives (acceptable content that the tool wrongly flags as harmful), all in a matter of milliseconds. While general-purpose labelling technologies can struggle to identify specific visual threats, ours has been designed to keep improving its accuracy on core visual threat categories rather than on everyday objects.

Moderation: Maintaining the human touch

Key to the success of our AI-powered technology is that it supports human moderators rather than threatening to replace them. When the AI is uncertain whether certain content warrants removal, it refers that content to human team members for validation. Before doing so, it groups the challenging content into categories and sorts it by risk, so that only moderators trained to review a given type of content can access it.

This hybrid solution ensures the human moderation team focusses on the right types of challenging content, protecting the wellbeing of moderators whilst boosting trust and safety within your community and safeguarding the reputation of your brand.
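To make the routing described above more concrete, here is a minimal Python sketch of threshold-based triage. It is purely illustrative and is not Image Analyzer's API. The `ScoredItem` type, the `triage` and `visible_to` functions, the category names, and the 0.9 and 0.5 thresholds are all hypothetical assumptions. Each item carries per-category risk scores. High-risk items are removed automatically, uncertain items go into per-category review queues sorted by risk, and each moderator sees only the queues they are trained for.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical thresholds; a real deployment would tune these per category.
AUTO_REMOVE_THRESHOLD = 0.9   # at or above: remove without human review
HUMAN_REVIEW_THRESHOLD = 0.5  # at or above (but below removal): send to a human


@dataclass
class ScoredItem:
    item_id: str
    # Hypothetical per-category risk scores in [0, 1], e.g. {"violence": 0.7}
    scores: dict[str, float]


def triage(items):
    """Split items into auto-removals, per-category review queues and approvals."""
    removed, approved = [], []
    review_queues = defaultdict(list)
    for item in items:
        if not item.scores:
            approved.append(item.item_id)  # nothing flagged in any category
            continue
        # Route each item by its highest-scoring category
        category, score = max(item.scores.items(), key=lambda kv: kv[1])
        if score >= AUTO_REMOVE_THRESHOLD:
            removed.append(item.item_id)
        elif score >= HUMAN_REVIEW_THRESHOLD:
            review_queues[category].append((score, item.item_id))
        else:
            approved.append(item.item_id)
    # Highest-risk items first within each category queue
    for queue in review_queues.values():
        queue.sort(key=lambda entry: entry[0], reverse=True)
    return removed, dict(review_queues), approved


def visible_to(trained_categories, review_queues):
    """Return only the queues a moderator is trained to review."""
    return {c: q for c, q in review_queues.items() if c in trained_categories}


if __name__ == "__main__":
    items = [
        ScoredItem("a", {"pornography": 0.97, "violence": 0.10}),
        ScoredItem("b", {"violence": 0.62, "extremism": 0.20}),
        ScoredItem("c", {"violence": 0.81}),
        ScoredItem("d", {"extremism": 0.10}),
    ]
    removed, queues, approved = triage(items)
    print(removed)   # ['a']
    print(queues)    # {'violence': [(0.81, 'c'), (0.62, 'b')]}
    print(approved)  # ['d']
    print(visible_to({"extremism"}, queues))  # {}
```

Routing each item by its single highest-scoring category keeps the sketch short. A real system might instead place an item in every category queue whose score crosses the review threshold.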

Community Feedback: Staying connected with your audience

While a combination of automation and moderation will be considerably more effective at detecting offensive material than human moderators alone, giving your community members the tools to flag anything that slips through remains key to maintaining trust, safety, and engagement in your online community.

Image Analyzer’s technology includes channels through which community members can raise concerns with the moderating team, giving individuals a voice and keeping your business connected to the issues that matter most to them.

If you’re interested in learning more about how Image Analyzer can automate a large portion of your moderation requirements and improve the trust and safety in your online community, please get in touch with us.